Research Overview

My research focuses on advancing the fields of virtual reality (VR), augmented reality (AR), and human-computer interaction (HCI). By investigating and developing innovative interaction techniques, enhancing user experiences, and improving navigation in virtual environments, my work aims to push the boundaries of how we interact with digital spaces. I am particularly interested in the perceptual and cognitive aspects of VR and AR interactions, which have significant implications for applications in entertainment, education, and industry.

Active Research

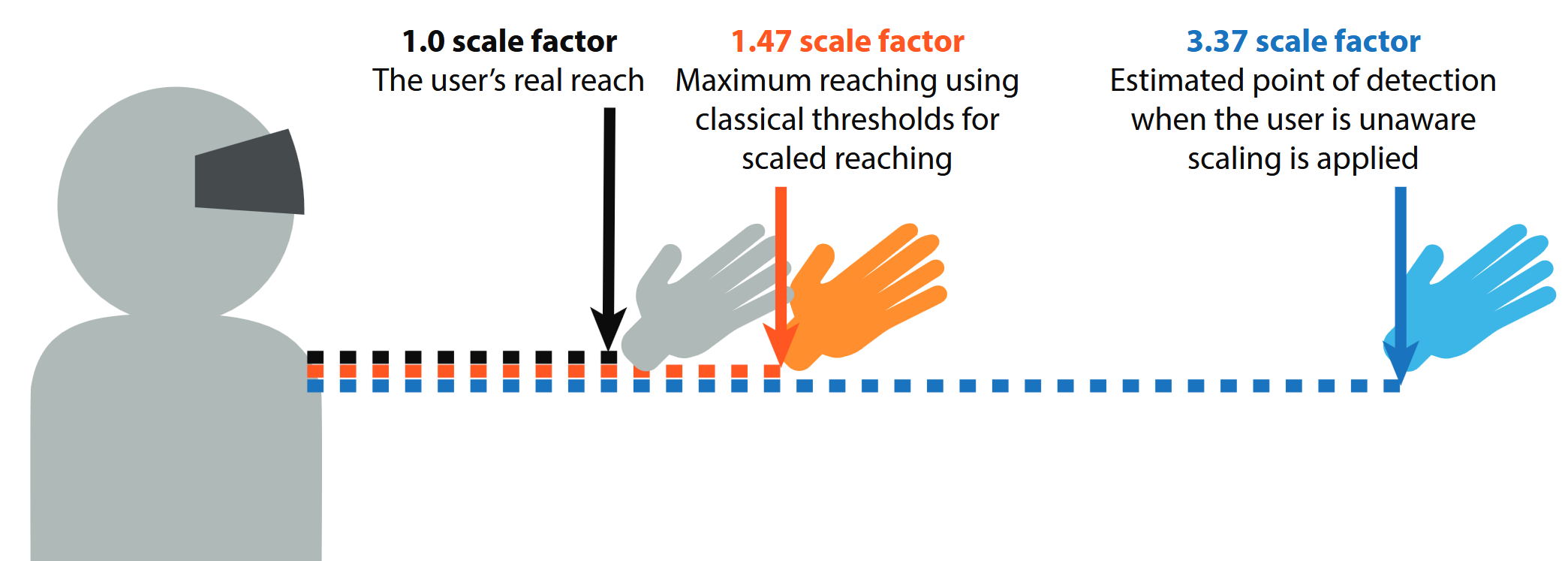

A to-scale image showing how much more strongly a technique can be applied without detection when users do not know it is being used. The blue hand represents the average scale at which users first detected the technique when they were not aware of it, while the orange hand represents the threshold derived from classical methods (in which users know about the technique).

Psychophysical Evaluations of Remapped Interaction Techniques in VR

In VR, we can break the rules of reality and modify parameters of the virtual world to overcome limitations of the physical world through input remapping: the translation of a user's physical movements into a different set of movements in the virtual world. Current uses of these techniques include (but are not limited to) enabling reaching for users with limited motor abilities, reducing arm fatigue in general users, and facilitating natural walking by subtly redirecting a user's movement.
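To make the idea of input remapping concrete, the sketch below shows one of the simplest possible forms: a linear gain applied to the hand's offset from a fixed origin. This is an illustrative assumption, not the specific technique studied in this work; the names `Vec3` and `remap_hand`, the choice of the shoulder as the origin, and the gain value are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vec3:
    """Minimal 3D vector (hypothetical helper for this sketch)."""
    x: float
    y: float
    z: float

    def __add__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x + other.x, self.y + other.y, self.z + other.z)

    def __sub__(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def scale(self, k: float) -> "Vec3":
        return Vec3(self.x * k, self.y * k, self.z * k)


def remap_hand(physical_hand: Vec3, origin: Vec3, gain: float) -> Vec3:
    """Map a tracked (physical) hand position to a virtual hand position.

    Assumption: the hand's offset from `origin` (e.g., the shoulder) is
    multiplied by `gain`. gain > 1 extends virtual reach, gain < 1 shrinks
    it, and gain == 1 is an unmodified 1:1 mapping.
    """
    offset = physical_hand - origin
    return origin + offset.scale(gain)


if __name__ == "__main__":
    shoulder = Vec3(0.0, 1.4, 0.0)
    hand = Vec3(0.0, 1.4, 0.5)  # physical hand 0.5 m in front of the shoulder
    print(remap_hand(hand, shoulder, gain=1.5))  # virtual hand 0.75 m in front
```

The larger the gain, the further the virtual hand drifts from the physical one, which is exactly why it matters how large the gain can be before users notice.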

My work focuses on the detection and perception of these techniques. To maintain natural-feeling interactions, we must know how strongly a technique can be applied before it becomes noticeable or distracting to users. I also develop novel methods of assessing how these techniques are detected and perceived by users who are not aware that their movements are being manipulated.
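One common classical way to estimate how strongly a technique can be applied before it is noticed is an adaptive staircase, in which participants know about the manipulation and report on each trial whether they detected it. The sketch below is a generic 1-up/1-down staircase (which converges on roughly the 50% detection point), not the procedure used in the publications listed here; `run_staircase`, its parameters, and the simulated observer are hypothetical.

```python
from typing import Callable


def run_staircase(
    detects: Callable[[float], bool],
    start_gain: float = 1.0,
    step: float = 0.1,
    max_reversals: int = 8,
    max_trials: int = 200,
) -> float:
    """Estimate a detection threshold for a remapping gain (hypothetical sketch).

    `detects(gain)` returns True if the participant noticed the remapping.
    After a detection, the gain moves toward 1.0 (weaker remapping); after a
    miss, it moves away from 1.0 (stronger remapping). The threshold estimate
    is the mean gain at the reversal points, where the direction changes.
    """
    gain = start_gain
    last_direction = 0
    reversals: list[float] = []
    for _ in range(max_trials):
        direction = -1 if detects(gain) else +1
        if last_direction != 0 and direction != last_direction:
            reversals.append(gain)
            if len(reversals) >= max_reversals:
                break
        last_direction = direction
        # Never go below a 1:1 mapping in this one-sided sketch.
        gain = max(1.0, gain + direction * step)
    return sum(reversals) / len(reversals) if reversals else gain


if __name__ == "__main__":
    # Simulated observer that notices any gain above 1.25 (a real study
    # would collect these responses from participants instead).
    print(run_staircase(lambda g: g > 1.25))
```

Because participants in such a procedure are told about the manipulation and actively look for it, thresholds derived this way may underestimate how much remapping goes unnoticed by users who are unaware of it.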

Resulting Publications

Strafing Gain: Redirecting Users One Diagonal Step at a Time

Examining Effects of Technique Awareness on the Detection of Remapped Hands in Virtual Reality

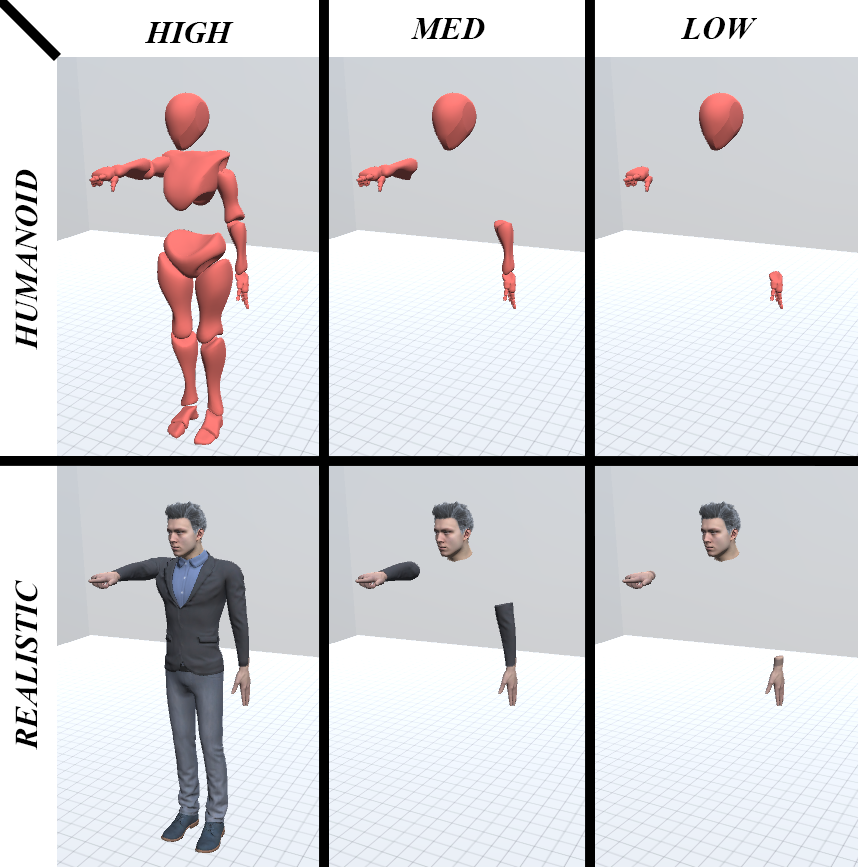

Different styles and visibilities of an avatar in a pointing pose.

Understanding Effects of Avatar Design on Gesture Interpretation

Humans often use gestures and poses in the real world to communicate nonverbally. These natural forms of communication include waving to greet someone, pointing to specify a location or direction, and using the body to convey sentiment (e.g., shrugging to communicate uncertainty). This project examines how avatar design affects how well users are able to understand gesture-based forms of communication.

Resulting Publications

The Effects of Virtual Avatar Visibility on Pointing Interpretation by Observers in 3D Environments

Previous Projects

A simulated ML system in which users accept or override an algorithm's output. An explanation, consisting of an image with bounding boxes and a textual description, is provided.

Effects of Initial Explanations on User Trust in Machine Learning

Machine learning algorithms are increasingly used in fields such as medicine, automation, and policing. They are often used to recommend courses of action or to identify important features of an input (e.g., possible pedestrians in front of a self-driving car). End users need to know why a system produced its output. This is often provided as a human-friendly explanation that is understandable to people with domain-specific knowledge but no prior experience with machine learning. This work seeks to identify how the information users are initially given affects their trust in the system.

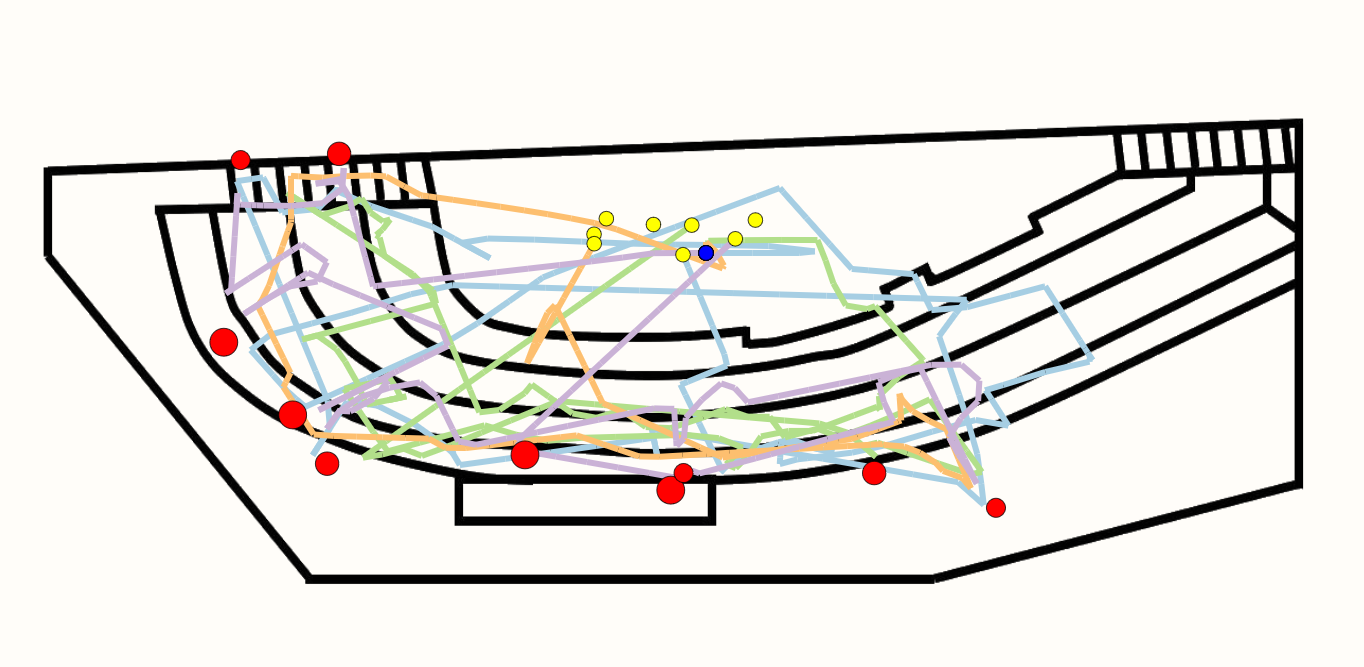

Our developed visualization. Each line represents a different sample flight path. Interactive elements not pictured.

Visualization of Drone Flight Data for Pilot Education

Drones are often used in building inspection tasks where humans cannot easily reach the area to be inspected. This project investigated how to present drone flight data in order to teach novice drone pilots best practices and strategies for piloting during building inspection tasks.

Resulting Publications

InDrone: Visualizing Drone Flight Patterns for Indoor Building Inspection Tasks

InDrone: a 2D-based drone flight behavior visualization platform for indoor building inspection