University of Florida researchers recently concluded the largest study on audio deepfakes to date, challenging 1,200 humans to distinguish real audio messages from digital fakes.

Participants achieved a 73% accuracy rate but were often fooled by machine-generated details, such as British accents and background noise.

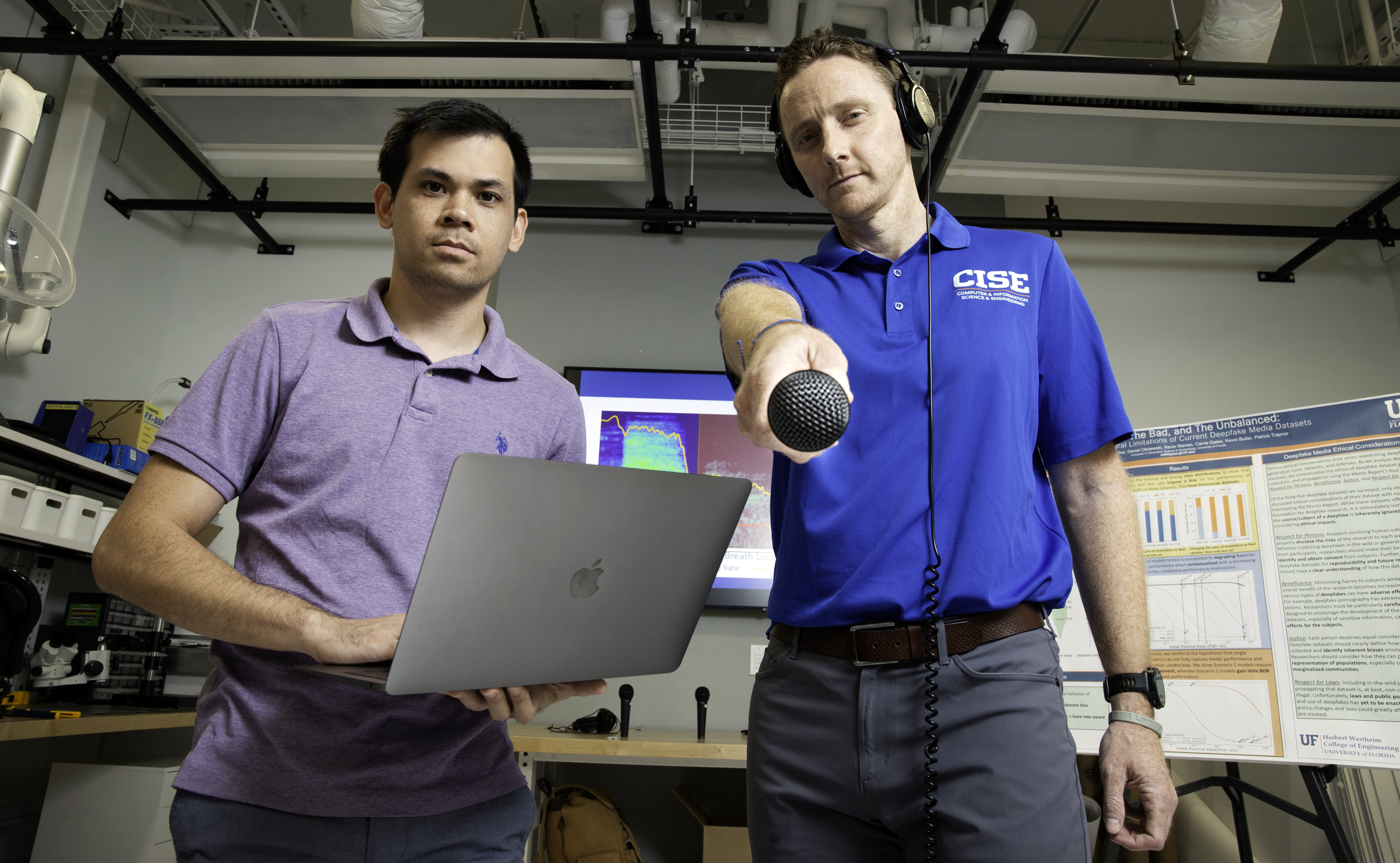

“We found humans weren’t perfect, but they were better than random guessing. They had some intuition behind their responses. That’s why we wanted to go into the deep dive — why are they making those decisions and what are they keying in on,” said co-lead author Kevin Warren, a Ph.D. student in the Department of Computer & Information Science and Engineering.

According to the authors of the UF paper, “Better Be Computer or I’m Dumb: A Large-Scale Evaluation of Humans as Audio Deepfake Detectors,” the study analyzed how well humans classify deepfake samples, why they make their classification decisions, and how their performance compares with that of machine learning (ML) detectors.

The results could ultimately help researchers develop more effective training and detection models to curb phone scams, misinformation and political interference.

The study’s lead investigator, UF professor and renowned deepfake expert Patrick Traynor, Ph.D., has been ear-deep in deepfake research for years, especially as the technology has grown more sophisticated and dangerous.

In January, Traynor was one of 12 experts and industry leaders invited to the White House to discuss deepfake detection tools and solutions. The meeting was called after phones in New Hampshire received audio messages earlier that month in which a fake voice of President Joe Biden discouraged people from voting in the state’s primary election.